The New Compliance Frontier: Structuring Your Organization for the AI Era

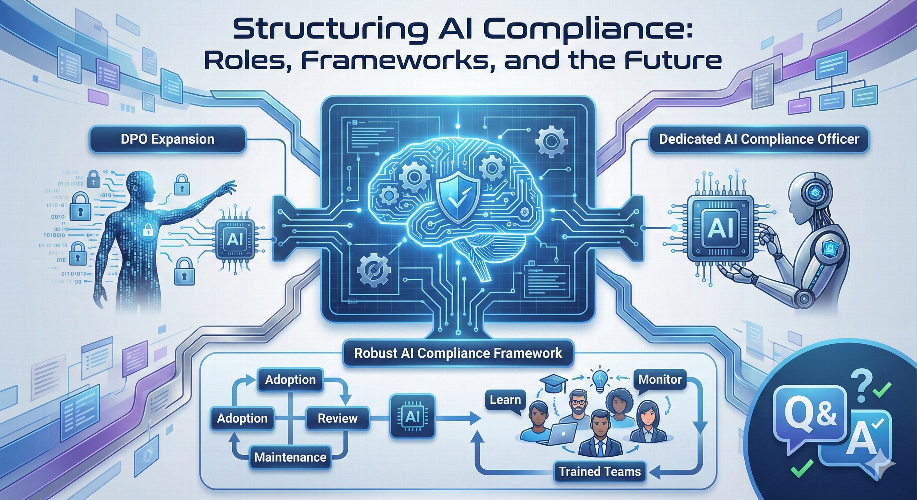

With Artificial Intelligence (AI) moving from a niche experiment to a core operational engine, the "set it and forget it" approach to compliance is obsolete. Organizations are now facing a critical structural question: Do we stretch our current data protection roles, or do we build something entirely new?

This guide breaks down the structural options, the "DPO vs. AI Officer" debate, and how to build a living framework that evolves as fast as the technology itself.

Part 1: Structural Dilemma – Dedicated Officer vs. The DPO

The most common question organizations face is whether the Data Protection Officer (DPO) can simply absorb AI compliance. While there is overlap, the roles are fundamentally different in scope.

The Comparison: DPO vs. AI Compliance Officer (AICO)

| Feature | Data Protection Officer (DPO) | AI Compliance Officer (AICO) |
|---|---|---|
| Primary Focus | Privacy, Global Data Privacy Laws, Personal Data Rights. | Model Safety, Bias, Ethics, Output Accuracy, IP. |
| Key Risk | Data leaks, unauthorized processing. | "Hallucinations," discrimination, drift, automation bias. |
| Scope | Personal data (PII). | Any data (personal, proprietary, or public) fed into models. |
| Skill Set | Legal, Privacy Law, IT Security. | Data Science basics, Ethics, Risk Management, Legal. |

The Verdict: Can the DPO do it?

Yes, but with caveats.

For Low-Maturity Orgs: If you only use off-the-shelf tools (e.g., ChatGPT Enterprise) for basic tasks, a DPO with upskilling can manage this.

For High-Maturity Orgs: If you are building models, fine-tuning LLMs, or using AI for high-impact decisions (hiring, lending, healthcare), the DPO will likely be overwhelmed. AI risks (like model drift) are technical, not just legal.

Recommendation: For most mid-to-large organizations, the best approach is often a "Matrix Structure." Appoint a dedicated AI Governance Lead who sits within the Risk or Compliance function and has a dotted-line reporting relationship to the DPO on privacy matters and to the CTO on technical matters.

Part 2: Building a Robust AI Compliance Framework

Instead of relying on a single "sheriff," you should build a framework that decentralizes responsibility while centralizing oversight. A robust framework rests on four pillars:

1. The Governance Gate (The "Green Light" Process)

Before any AI tool is adopted, it must pass a triage process.

- Intake Form: Any department wanting to use an AI tool must fill out a simple intake form: What data goes in? What decisions come out? Is a human in the loop?

- Risk Tiering: Classify the tool automatically:

  - Low Risk: Productivity tools (e.g., meeting summarizers). -> Auto-approve with guidelines.

  - High Risk: Decision tools (e.g., resume screening). -> Requires full committee review.
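The triage step above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `IntakeForm` fields, the function names, and the single tiering rule (any tool that outputs decisions is High Risk) are assumptions chosen to mirror the intake questions and the two tiers in this guide.

```python
from dataclasses import dataclass, field


@dataclass
class IntakeForm:
    """Hypothetical intake form mirroring the three intake questions."""
    tool_name: str
    data_in: list[str] = field(default_factory=list)        # What data goes in?
    decisions_out: list[str] = field(default_factory=list)  # What decisions come out?
    human_in_loop: bool = True                               # Is a human in the loop?


def risk_tier(form: IntakeForm) -> str:
    """Assumed rule: any tool that outputs decisions is High Risk."""
    return "high" if form.decisions_out else "low"


def route(form: IntakeForm) -> str:
    """Map the tier to the next step described in this guide."""
    if risk_tier(form) == "high":
        return "full committee review"
    return "auto-approve with guidelines"


summarizer = IntakeForm("meeting summarizer", data_in=["meeting audio"])
screener = IntakeForm(
    "resume screener",
    data_in=["resumes"],
    decisions_out=["shortlist or reject candidate"],
)
print(route(summarizer))  # auto-approve with guidelines
print(route(screener))    # full committee review
```

A real policy would likely weigh more signals (for example, the sensitivity of `data_in` or the absence of a human reviewer), but keeping the rule explicit in code makes the tiering auditable.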

2. The "Human-in-the-Loop" Protocol

Define exactly where humans must intervene.

- Review: Humans must verify AI outputs before they are sent to customers.

- Override: Staff must have the authority (and technical ability) to override an AI decision without fear of reprimand.
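One way to make the review and override rules technically enforceable is to hold every AI draft until a named human has acted on it. The sketch below assumes a hypothetical `PendingOutput` record and three helper functions; none of these names come from a real library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PendingOutput:
    """An AI draft held back until a human has reviewed it (hypothetical type)."""
    ai_text: str
    final_text: Optional[str] = None  # set only after human review
    reviewer: Optional[str] = None
    overridden: bool = False


def approve(item: PendingOutput, reviewer: str) -> None:
    """Review: a human accepts the AI draft as written."""
    item.final_text = item.ai_text
    item.reviewer = reviewer


def override(item: PendingOutput, reviewer: str, replacement: str) -> None:
    """Override: a human replaces the AI draft with their own decision."""
    item.final_text = replacement
    item.reviewer = reviewer
    item.overridden = True


def send_to_customer(item: PendingOutput) -> str:
    """Refuse to release anything that has not passed human review."""
    if item.reviewer is None:
        raise PermissionError("AI output has not been reviewed by a human")
    return item.final_text


draft = PendingOutput("Your refund was denied.")
override(draft, reviewer="j.doe", replacement="Your refund was approved.")
print(send_to_customer(draft))  # Your refund was approved.
```

Recording `reviewer` and `overridden` on each item also gives the compliance team a trail of how often staff disagree with the model.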

3. Continuous Monitoring (The "Drift" Check)

AI models can degrade over time as real-world data shifts away from the data they were trained on (model drift). Compliance is not a one-time stamp.

- Quarterly Audits: Run specific checks for bias (for example, is the model rejecting one demographic more often than others?).

- Feedback Channels: An easy way for employees to flag "weird" AI behavior to the compliance team.
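A quarterly bias check can start with something as simple as comparing acceptance rates across groups. The sketch below is illustrative only: the function names and sample data are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance, used here as an assumed screening heuristic rather than a legal test or a method this guide prescribes.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, accepted) pairs, accepted being a bool."""
    totals, accepted = Counter(), Counter()
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            accepted[group] += 1
    return {group: accepted[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group acceptance rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was accepted, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up audit sample: group A accepted 8 of 10 times, group B 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; flags the tool for closer review
print(rates, needs_review)
```

A flag from a check like this is a prompt for investigation, not proof of discrimination; small samples and legitimate differences between groups can both move the ratio.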

4. Vendor Compliance

Most organizations buy rather than build. Your framework must include a Vendor AI Addendum asking:

- Do you train your models on our data? (The answer should ideally be no.)

- How often do you test for bias?

Part 3: Operationalizing – Training & Maintenance

You cannot hire enough compliance officers to watch every employee. The only scalable solution is to train your teams to be the "first line of defense."

The Training Curriculum

Split your training into tiers based on roles:

| Audience | Training Focus | Key Learning Outcome |
|---|---|---|
| General Staff | AI Hygiene | Never put PII or IP into public chatbots; always verify outputs. |
| Managerial | Risk Spotting | Recognizing when a process is too risky for automation; signing off on AI use. |
| Developers/Data | Technical Ethics | Preventing data poisoning; documenting model lineage; bias testing. |

The "Review and Maintenance" Workflow

Establish a Quarterly AI Review Board (AIRB).

- Who: Legal, DPO, IT Security, and Business Unit Heads.

- Agenda:

  1. Review usage logs of approved tools (is adoption matching intent?).

  2. Review "Shadow AI" reports (what unapproved tools are hitting the network?).

  3. Assess regulatory updates (e.g., new EU AI Act rules).
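The "Shadow AI" report on the agenda could be produced by comparing network traffic against the list of approved tools. The following is a rough sketch under stated assumptions: the domain names are placeholders, and `KNOWN_AI_DOMAINS`, `APPROVED_DOMAINS`, and `shadow_ai_report` are hypothetical names, not part of any real product.

```python
from collections import Counter

# Hypothetical domain lists; in practice these would come from your proxy or
# DNS logs and from the register of tools that passed the governance gate.
KNOWN_AI_DOMAINS = {
    "chat.example-ai.com",
    "api.example-llm.net",
    "assistant.example.org",
}
APPROVED_DOMAINS = {"assistant.example.org"}


def shadow_ai_report(observed_domains):
    """Count requests to known AI domains that are not on the approved list."""
    unapproved = KNOWN_AI_DOMAINS - APPROVED_DOMAINS
    hits = Counter(d for d in observed_domains if d in unapproved)
    return hits.most_common()  # most frequently used unapproved tools first


log = [
    "assistant.example.org",
    "chat.example-ai.com",
    "chat.example-ai.com",
    "api.example-llm.net",
    "intranet.example.com",
]
print(shadow_ai_report(log))
# [('chat.example-ai.com', 2), ('api.example-llm.net', 1)]
```

The ranked output gives the board a starting point for deciding which unapproved tools to evaluate, replace with a sanctioned alternative, or block.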

Part 4: Q&A – Common Questions

Q1: Do we need a "Chief AI Officer" (CAIO)?

A: Not necessarily for compliance. A CAIO is usually a strategic role focused on growth and innovation. Compliance is better housed under a Chief Risk Officer or Legal to ensure independence. If you put compliance under a CAIO who is incentivized to launch products fast, you create a conflict of interest.

Q2: Can we just use our existing GDPR Impact Assessments (DPIAs)?

A: They are a good start but insufficient. A DPIA focuses on data privacy. You need an Algorithmic Impact Assessment (AIA) which asks broader questions: Will this tool reduce human agency? Is the output accurate? Is the model robust against adversarial attacks?

Q3: How do we handle "Shadow AI" (employees using tools secretly)?

A: You cannot block everything. The best approach is "Safe Harbor." Provide an enterprise-licensed, secure version of a generative AI tool (like ChatGPT Enterprise or Copilot) so employees have a safe place to work. If you provide no tools, they will find their own unsafe ones.

Q4: What is the biggest mistake organizations make with AI compliance?

A: Treating AI as a purely "IT problem." If HR uses an AI tool to scan resumes and it discriminates against women, that is a legal and reputational crisis, not a software bug. Compliance must own the process; IT owns the tool.

Conclusion: Moving from Policing to Partnership

Ultimately, the specific title you choose—whether expanding the DPO's remit or appointing a dedicated AI Compliance Officer—matters less than the agility of the framework you build. AI compliance is no longer about static "tick-box" exercises; it requires a dynamic ecosystem where Legal, IT, and HR collaborate continuously. By shifting from a model of reactive policing to one of proactive partnership, and by empowering every employee to act as a steward of AI ethics, your organization can treat compliance not as a roadblock, but as a guardrail that allows for high-speed innovation. In the AI era, the safest organizations will not be the ones that say "no" the most, but the ones that know exactly how to safely say "yes."